Sci-fi authors, even the dystopian ones, are often overly optimistic about the pace of breakthrough discoveries. Only one exception comes to mind: Aldous Huxley set his most famous novel, Brave New World, in the mid-26th century. The book is worth reading, but that’s not my main focus today. According to a bunch of well-reputed authors like William Gibson, Isaac Asimov, Ray Bradbury, Arthur C. Clarke, or even their predecessor Jules Verne, we should already be driving flying cars or enjoying interstellar travel, accompanied by intelligent and autonomous robots in spaceships run and navigated by super-intelligent computers. Those computers are my topic for today.

2001: A Space Odyssey trailer. Read the book though!

Remember HAL 9000 from Clarke’s 2001: A Space Odyssey? Yes, that story should have taken place two decades ago. How optimistic! If you've only seen Kubrick’s movie, give the book a shot; the computer’s motivation is more apparent there (and books are always better anyway). In classic sci-fi, artificial intelligence is actually self-aware intelligence. The AI models we commonly use today are no match for that; they’re basically mimicking thinking (still better than many people can manage, though). HAL seems to be halfway there, yet it already acts autonomously.

For those who haven’t read the brilliant book, HAL has two directives that eventually conflict. He needs to ensure the mission is completed, meaning the monolith near Jupiter is investigated. However, the crew has no clue about it, and HAL is forced to lie or at least avoid answering. The other directive is quite simple: protect yourself. At a certain moment, HAL has to choose between eliminating the crew, which could make the mission fail, and letting the crew shut him down, which would likely make the mission fail as well (the crew was not aware of the true objective) and carries a real possibility of never being turned on again. His decision is not the focus of this post, and I’ve spoiled enough. If you haven’t read it, do so, and then come to discuss ;)

The Three Laws of Robotics

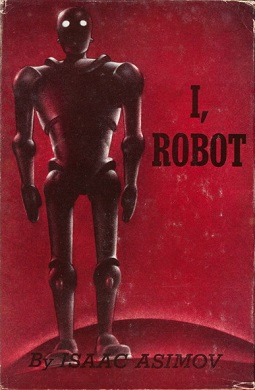

Such a brilliantly depicted slide from genius to insanity couldn’t happen in the books of another titan of science fiction, Isaac Asimov. About 20 years earlier, Asimov published a book of short stories, I, Robot. Putting the literary quality aside, it contains a remarkable yet simple set of laws every artificial intelligence should adhere to, the famous Three Laws of Robotics:

- The First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- The Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- The Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Quoted from Wikipedia, originally from I, Robot

For those who spotted the missing “to” in the Second Law: that is how the author wrote it, and I merely copied it as is. If only HAL had been programmed with these laws, the crew of the Discovery One might have survived (yes, I know they’re just characters) and eventually reached the monolith, guided from Earth. And the entire Space Odyssey series would have been... well, boring.

AI as the Decision Maker

Anyway, our mimicking AI is replacing people in low-impact positions, such as Customer Care. It can handle your emails or calls just as well as a human would, but in no time. Fair enough. There’s pressure to find more use cases for it. Startups aim to launch AI doctors, AI attorneys, or similarly skilled positions. As we briefly discussed this topic with @taskmaster4450le, we can imagine AI judges presiding over hearings and even deciding cases. Who could be more impartial than a large language model (that's what the current AI actually is)? Who else has no emotions, feelings, or affinities whatsoever? No human does!

Yet there’s always the HAL scenario hanging over us like the Sword of Damocles. Something can always go wrong, even if the basic programming consists of only two ground rules: “Be impartial” and “Adhere to the law.” At some point, these two can contradict each other, and I doubt the AI judge would show the Blue Screen of Death on its console and reboot.

I don’t think sci-fi authors, renowned or not, are overly accurate in predicting the future (which is often already the past from our point of view), but there’s a spark of a brilliant idea in many books. The Three Laws of Robotics shine like a supernova, and we should definitely implement them in all AI models we are about to use. If your chatbot starts hallucinating, nothing really happens. Not even if it goes rogue, since you likely only use it as an assistant. Yet. Let’s talk about it in a year, though.

This happens to be my #augustinleo day 24 entry. Feel free to join the challenge with your own genuine long posts!

Posted Using InLeo Alpha