For most people, I'd agree, there's no real need to chase upgrades. I used to, and I've stopped, though I do depend on my machine for my job. Unless you game, do AI work, or develop, I'd usually just recommend a $300-400 mini PC; they're so great these days. I have a cluster of mini PCs running 60-80 Docker containers and a few VMs at any given time, with failover in under 3 minutes.

My old system wasn't slow by any means; I just didn't want to deal with power supply issues when upgrading to a 5090. A few of the games I play just weren't running as well on the 3090 as I'd like.

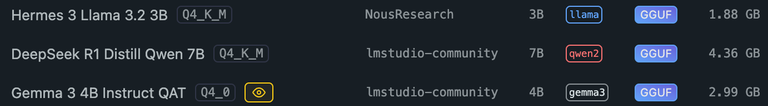

What models are you using locally? I've found most local models just suck until you get into a few hundred GB of VRAM and can run something like DeepSeek or Qwen3 235B at higher-precision quants. It really depends on what you're doing, though. Claude is my go-to for most things, but I try to offload smaller tasks to Qwen. Llama is such garbage, though. Hunyuan just came out and is getting a lot of good press, and it's a small model, considering. Some are saying it's on par with Qwen3 235B, but I haven't tested it yet.

I hear good things about Bazzite, but I haven't tried it. I went through a lot of distros a while ago before settling on Arch. Arch is amazing, and I've made some changes that make things really handy. For example, I have a pacman hook that saves my installed packages to a text file so I always have a list handy, and it also names the BTRFS snapshots taken before and after.
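
If anyone wants to try the package-list part, here's a rough sketch of how it could look. This isn't my exact setup, just a minimal version under some assumptions: the script name, hook file name, and output path are all made up, and I'm assuming you only want explicitly installed packages (`pacman -Qqe`). I left the snapshot naming out since that depends on which snapshot tool you use.

```python
#!/usr/bin/env python3
"""Save the explicitly installed package list to a text file.

Meant to be called from a pacman hook. A hypothetical hook file,
e.g. /etc/pacman.d/hooks/pkglist.hook (name and paths are assumptions):

    [Trigger]
    Operation = Install
    Operation = Upgrade
    Operation = Remove
    Type = Package
    Target = *

    [Action]
    Description = Saving package list
    When = PostTransaction
    Exec = /usr/local/bin/save-pkglist.py /var/backups/pkglist.txt
"""
import subprocess
import sys
from pathlib import Path


def format_package_list(raw: str) -> str:
    """Return sorted, de-duplicated package names, one per line."""
    names = sorted({line.strip() for line in raw.splitlines() if line.strip()})
    return ("\n".join(names) + "\n") if names else ""


def save_package_list(dest: Path, cmd=("pacman", "-Qqe")) -> int:
    """Run the listing command, write its output to dest, return the count.

    `cmd` defaults to `pacman -Qqe` (explicitly installed packages, names
    only); it is a parameter so the function can be tried without pacman.
    """
    result = subprocess.run(list(cmd), check=True, capture_output=True, text=True)
    text = format_package_list(result.stdout)
    dest.parent.mkdir(parents=True, exist_ok=True)
    dest.write_text(text)
    return text.count("\n")


# Only runs when called with exactly one argument: the output path.
if __name__ == "__main__" and len(sys.argv) == 2:
    count = save_package_list(Path(sys.argv[1]))
    print(f"saved {count} packages to {sys.argv[1]}")
```

With a list like that, reinstalling on a fresh machine is roughly `pacman -S --needed - < pkglist.txt`, though AUR packages in the list would need to be handled separately.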