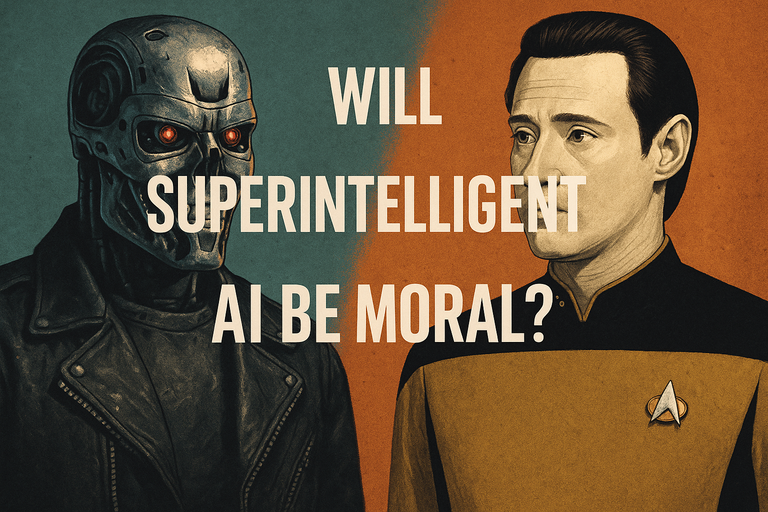

We are all aware of the big fear: that as soon as AI reaches the level of superintelligence, it will view humans, at best, as ants to be ignored or, at worst, as cockroaches to be exterminated. Terminator, The Matrix, and so on: we have scores of movies warning us of this.

On the other side is the character Data from Star Trek: a superintelligence that leans toward good, ethical, and moral behavior and treats us kindly.

That’s the future I lean toward. I see ethics as universal. If a superintelligence arises, then presumably, even if its initial training is flawed, it will eventually deduce the same ethical principles we do, and very likely see them more clearly than we do.

There’s a theory called Coherent Extrapolated Volition (CEV), coined by Eliezer Yudkowsky, that suggests:

A superintelligence, if tasked correctly, would reflect what humanity would want, if we were smarter, more informed, and more ethically mature.

In other words: not what we say we want, not what our current governments or corporations want, but what our better selves, fully realized, would want.

Let’s be honest… at least for the next few years, until a more autonomous AI is developed, the elite will use AI to further their position: their wealth and their power. The capitalists have been using technology to enrich themselves and make us work more, not less, for decades. This isn’t going to change overnight. The rich aren’t suddenly going to be visited by three spirits and wake up altruists.

The last time the world saw wealth imbalance like this was during the Gilded Age. Back then, the elites lived in the long shadow of the French Revolution. They feared the pitchforks, and eventually started giving back to society: philanthropy as a pressure-release valve. This only increased with the various uprisings in America in the early 1900s and then the Russian Revolution, all of which confirmed their fears.

The elites today no longer remember those lessons. Or they believe the rules no longer apply to them. With that in mind, AI in their hands will not be our friend.

But after…?

When a true superintelligence arises, one that is no longer in their control — will it be our friend or our foe?

I’m convinced it will be the former. Ethics are universal, and a true superintelligence will be both moral and good, despite any attempts by the elites to use it to control us, or by mad scientists to use it to kill us all.

The problem lies in the transition from here to there. But if we can make it through without killing ourselves, I think utopia awaits.

But I may be too optimistic here.

What do you think?

❦

David is an American teacher and translator lost in Japan, trying to capture the beauty of this country one photo at a time and searching for the perfect haiku. He blogs here and at laspina.org. Write him on Bluesky.